Neural networks are computational models inspired by the human brain’s architecture and functioning. They consist of interconnected nodes, or neurons, that process information in a manner loosely akin to biological neural networks. Each neuron receives inputs, computes a weighted sum of them, applies an activation function to the result, and passes the output to subsequent neurons.

This structure allows neural networks to learn from data by adjusting the weights of connections based on the input they receive and the output they produce. The fundamental building block of a neural network is the perceptron, which is a simple model that can classify inputs into binary outputs. However, modern neural networks are far more complex, often comprising multiple layers of neurons, which enables them to capture intricate patterns in data.
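As a rough illustration of the perceptron described above, here is a minimal sketch in plain Python. The function names, the toy AND dataset, and the learning rate are illustrative assumptions rather than anything prescribed by the article.

```python
def perceptron_predict(weights, bias, inputs):
    """Return 1 if the weighted sum plus bias is positive, else 0."""
    total = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if total > 0 else 0


def train_perceptron(samples, labels, learning_rate=1.0, epochs=20):
    """Classic perceptron learning rule; hyperparameters are illustrative."""
    weights = [0.0] * len(samples[0])
    bias = 0.0
    for _ in range(epochs):
        for inputs, target in zip(samples, labels):
            error = target - perceptron_predict(weights, bias, inputs)
            # Nudge each weight in the direction that reduces the error.
            weights = [w + learning_rate * error * x
                       for w, x in zip(weights, inputs)]
            bias += learning_rate * error
    return weights, bias


# Toy data (assumed for illustration): logical AND, which is linearly separable.
and_inputs = [(0, 0), (0, 1), (1, 0), (1, 1)]
and_labels = [0, 0, 0, 1]

weights, bias = train_perceptron(and_inputs, and_labels)
predictions = [perceptron_predict(weights, bias, x) for x in and_inputs]
print(predictions)  # prints [0, 0, 0, 1]
```

A single perceptron can only separate classes with a straight line (or hyperplane), which is one reason modern networks stack many such units into layers.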

The learning process in neural networks is typically supervised, meaning that the model is trained on labeled datasets where the desired output is known. During training, the network makes predictions based on its current weights and compares these predictions to the actual outputs. The difference between the predicted and actual outputs is quantified using a loss function, which guides the optimization process.
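To make the idea of a loss function concrete, the sketch below implements two widely used examples: mean squared error and binary cross-entropy. The article does not name a specific loss, so both choices, and the function names, are assumptions for illustration.

```python
import math


def mean_squared_error(predicted, actual):
    """Average squared difference, commonly used for regression."""
    return sum((p - a) ** 2 for p, a in zip(predicted, actual)) / len(actual)


def binary_cross_entropy(predicted_probs, actual_labels, eps=1e-12):
    """Average negative log-likelihood for 0/1 labels."""
    total = 0.0
    for p, y in zip(predicted_probs, actual_labels):
        p = min(max(p, eps), 1.0 - eps)  # clamp to avoid log(0)
        total += -(y * math.log(p) + (1 - y) * math.log(1.0 - p))
    return total / len(actual_labels)


print(mean_squared_error([1.0, 2.0], [1.0, 4.0]))  # prints 2.0
confident = binary_cross_entropy([0.9, 0.1], [1, 0])
unsure = binary_cross_entropy([0.6, 0.4], [1, 0])
print(confident < unsure)  # prints True
```

In both cases a smaller value means the predictions are closer to the labels, which is what gives the optimizer a direction to move in.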

Backpropagation computes how much each weight contributed to this loss, and an optimizer such as gradient descent then adjusts the weights to reduce it, gradually improving the network’s accuracy. This iterative process allows neural networks to generalize from training data and make predictions on unseen data, a crucial aspect of their functionality.
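The weight-adjustment loop can be sketched with the smallest possible model: a single weight fitted by gradient descent. With only one weight, the chain rule that backpropagation applies layer by layer reduces to a single derivative. The data, learning rate, and step count below are assumptions chosen so the example converges quickly.

```python
def train_single_weight(xs, ys, learning_rate=0.05, steps=200):
    """Fit y = w * x by gradient descent on mean squared error."""
    w = 0.0
    for _ in range(steps):
        # d/dw of mean((w*x - y)^2) is mean(2 * (w*x - y) * x).
        grad = sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / len(xs)
        w -= learning_rate * grad  # step against the gradient
    return w


# Toy data (assumed): the true relationship is y = 2x.
xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.0, 4.0, 6.0, 8.0]
w = train_single_weight(xs, ys)
print(round(w, 4))  # prints 2.0
```

Real networks repeat this same idea for millions of weights at once, with backpropagation supplying each weight's gradient.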

Key Takeaways

- Neural networks are a type of machine learning model inspired by the human brain, consisting of interconnected nodes that process and analyze data.

- Deep learning is a subset of machine learning that uses neural networks with multiple layers to extract higher-level features from raw data, enabling more complex and accurate predictions.

- The architecture of neural networks includes input and output layers, hidden layers, and various activation functions, which determine how data is processed and transformed.

- Training and optimization in deep learning involve adjusting the network’s parameters and minimizing errors through techniques like backpropagation and gradient descent.

- Neural networks have diverse applications in industries such as healthcare, finance, and automotive, revolutionizing processes like disease diagnosis, fraud detection, and autonomous driving.

The Role of Deep Learning in Neural Networks

How Deep Learning Models Work

Deep learning models stack many layers of neurons between input and output. This depth enables them to learn hierarchical representations of data, where lower layers capture simple features and higher layers combine these features into more abstract concepts. For instance, in image recognition tasks, early layers might detect edges and textures, while deeper layers identify shapes and objects.

The Rise of Deep Learning

The rise of deep learning has been fueled by advancements in computational power and the availability of vast amounts of data. Graphics Processing Units (GPUs) have become essential for training deep learning models due to their ability to perform parallel computations efficiently. Additionally, large-scale datasets from sources such as social media, online transactions, and sensor data have provided the necessary fuel for training these complex models.

Successes and Advantages of Deep Learning

As a result, deep learning has achieved remarkable success in various domains, including computer vision, natural language processing, and speech recognition. Its ability to automatically extract features from raw data without extensive manual feature engineering distinguishes it from traditional machine learning approaches.

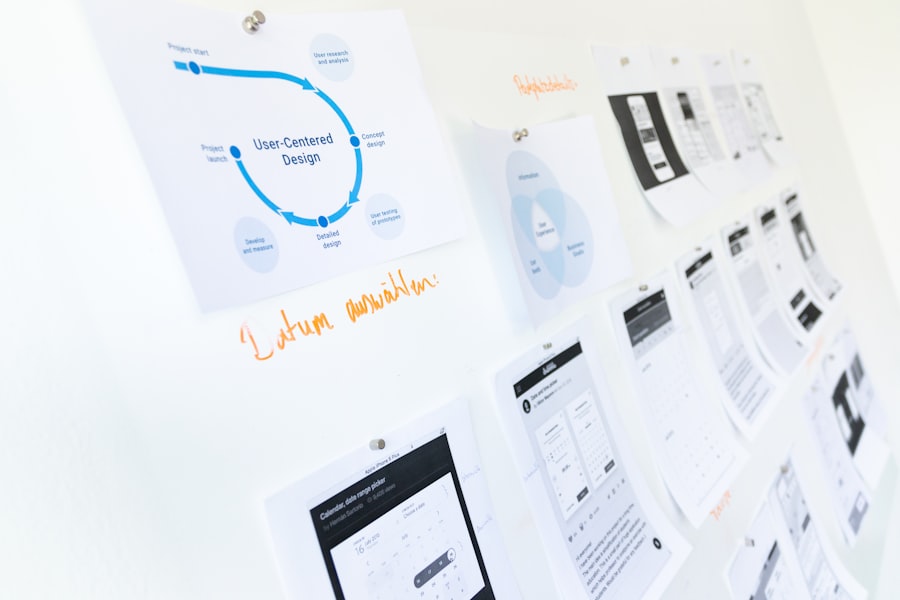

Exploring the Architecture of Neural Networks

The architecture of a neural network refers to its structure, including the number of layers, the number of neurons in each layer, and how these layers are connected. A typical architecture consists of an input layer, one or more hidden layers, and an output layer. The input layer receives raw data, while the hidden layers perform computations and transformations on this data.
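The layered structure described above can be sketched as a forward pass through a tiny network with two inputs, three hidden neurons, and one output. The weights here are hypothetical hand-picked values, not trained ones, and `dense_layer` and `forward` are illustrative names.

```python
def relu(z):
    return max(0.0, z)


def identity(z):
    return z


def dense_layer(inputs, weights, biases, activation):
    """One fully connected layer: activation(weights . inputs + bias) per neuron."""
    return [
        activation(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]


# Hypothetical fixed weights: 2 inputs -> 3 hidden neurons -> 1 output.
hidden_w = [[1.0, -1.0], [0.5, 0.5], [-1.0, 1.0]]
hidden_b = [0.0, 0.0, 0.0]
output_w = [[1.0, 2.0, 1.0]]
output_b = [0.5]


def forward(inputs):
    hidden = dense_layer(inputs, hidden_w, hidden_b, relu)   # hidden layer
    return dense_layer(hidden, output_w, output_b, identity)  # output layer


print(forward([2.0, 1.0]))  # prints [4.5]
```

Adding more hidden layers is a matter of chaining further `dense_layer` calls, which is how depth is built up in practice.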

The output layer produces the final predictions or classifications based on the processed information. Each layer is composed of neurons that apply activation functions to their inputs, introducing non-linearity into the model and enabling it to learn complex relationships. Common activation functions include the sigmoid function, hyperbolic tangent (tanh), and Rectified Linear Unit (ReLU).
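The three activation functions named above are short enough to write out directly. This sketch also includes their derivatives, since gradient-based training multiplies these slopes together as it moves back through the layers. The function names are illustrative.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def sigmoid_grad(z):
    s = sigmoid(z)
    return s * (1.0 - s)  # never exceeds 0.25


def tanh(z):
    return math.tanh(z)


def tanh_grad(z):
    return 1.0 - math.tanh(z) ** 2  # never exceeds 1.0


def relu(z):
    return max(0.0, z)


def relu_grad(z):
    return 1.0 if z > 0 else 0.0  # exactly 1 for positive inputs


for z in (-4.0, 0.0, 4.0):
    print(z, sigmoid(z), tanh(z), relu(z))
```

Sigmoid squashes values into (0, 1) and tanh into (-1, 1), while ReLU passes positive values through unchanged and zeroes out the rest.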

The choice of activation function can significantly impact a network’s performance; for instance, ReLU has become popular due to its ability to mitigate the vanishing gradient problem often encountered with sigmoid and tanh functions in deep networks. Additionally, various architectural designs have emerged to address specific tasks. Convolutional Neural Networks (CNNs) are particularly effective for image processing tasks due to their ability to capture spatial hierarchies through convolutional layers.
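To give a feel for what a convolutional layer computes, the sketch below slides a small kernel over a toy image. It is a simplified, assumed setup: a single channel, no padding, stride 1, and a hand-written kernel rather than a learned one.

```python
def conv2d(image, kernel):
    """Slide the kernel over the image (no padding, stride 1).

    As in most deep learning libraries, the kernel is not flipped, so this
    is technically a cross-correlation.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j]
                for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


# Toy image (assumed): dark on the left, bright on the right.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
# Hand-written kernel that responds to a dark-to-bright vertical edge.
vertical_edge = [
    [-1, 1],
    [-1, 1],
]

feature_map = conv2d(image, vertical_edge)
print(feature_map)  # prints [[0, 2, 0], [0, 2, 0]]
```

The output is largest exactly where the edge sits. In a trained CNN the kernel values are learned, and stacking such layers is what lets later layers respond to larger structures.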

Recurrent Neural Networks (RNNs), on the other hand, are designed for sequential data processing, making them suitable for tasks like language modeling and time series prediction.
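The defining feature of a recurrent network is a hidden state that is carried from one time step to the next. The sketch below uses a single scalar hidden unit with hypothetical fixed weights (`w_x`, `w_h`, `b`) purely to show that mechanism. Practical RNNs use weight matrices and learn them from data.

```python
import math


def rnn_step(x, h_prev, w_x, w_h, b):
    """New hidden state from the current input and the previous state."""
    return math.tanh(w_x * x + w_h * h_prev + b)


def run_rnn(sequence, w_x=0.5, w_h=0.8, b=0.0):
    """Process a sequence one element at a time, returning every hidden state."""
    h = 0.0
    states = []
    for x in sequence:
        h = rnn_step(x, h, w_x, w_h, b)
        states.append(h)
    return states


with_signal = run_rnn([1.0, 0.0])
without_signal = run_rnn([0.0, 0.0])
print(with_signal[-1] > without_signal[-1])  # prints True
```

Both sequences end with the same input (0.0), yet the final states differ because the first one still "remembers" the earlier 1.0. That memory is what makes the architecture suitable for sequential data.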

Training and Optimization in Deep Learning

Training a neural network involves adjusting its weights based on input data and corresponding labels to minimize prediction errors. This process typically employs optimization algorithms such as Stochastic Gradient Descent (SGD) or its variants like Adam and RMSprop. These algorithms iteratively update the weights by calculating gradients of the loss function with respect to each weight.
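A minimal sketch of mini-batch stochastic gradient descent follows, fitting a line with two parameters. It is plain SGD only; Adam and RMSprop add per-parameter adaptive step sizes that are not shown here. The dataset, learning rate, batch size, and seed are assumptions for illustration.

```python
import random


def sgd_linear_fit(xs, ys, learning_rate=0.05, batch_size=2, epochs=500, seed=0):
    """Fit y = w * x + b with mini-batch SGD on mean squared error."""
    rng = random.Random(seed)
    w, b = 0.0, 0.0
    indices = list(range(len(xs)))
    for _ in range(epochs):
        rng.shuffle(indices)  # visit the data in a new random order each epoch
        for start in range(0, len(indices), batch_size):
            batch = indices[start:start + batch_size]
            # Gradients of the batch loss with respect to w and b.
            grad_w = sum(2 * (w * xs[i] + b - ys[i]) * xs[i] for i in batch) / len(batch)
            grad_b = sum(2 * (w * xs[i] + b - ys[i]) for i in batch) / len(batch)
            w -= learning_rate * grad_w
            b -= learning_rate * grad_b
    return w, b


# Toy data (assumed): the true relationship is y = 3x + 1.
xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 4.0, 7.0, 10.0]
w, b = sgd_linear_fit(xs, ys)
print(round(w, 3), round(b, 3))  # prints 3.0 1.0
```

Each update uses only a small batch rather than the full dataset, which is what makes the method "stochastic" and keeps each step cheap on large datasets.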

The gradients indicate how much each weight should be adjusted to reduce the loss, guiding the optimization process toward a local minimum. One critical aspect of training is managing overfitting, where a model learns noise in the training data rather than generalizable patterns. Techniques such as dropout regularization, where random neurons are temporarily removed during training, help mitigate overfitting by forcing the network to learn redundant representations.
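Dropout as described above can be sketched in a few lines. This version uses the common "inverted dropout" convention, which rescales the surviving activations during training so that nothing needs to change at inference time. That rescaling detail is an assumption beyond what the article states.

```python
import random


def dropout(activations, drop_prob, rng, training=True):
    """Randomly zero activations during training (inverted dropout)."""
    if not training or drop_prob == 0.0:
        return list(activations)  # inference: pass everything through
    keep_prob = 1.0 - drop_prob
    return [
        a / keep_prob if rng.random() < keep_prob else 0.0
        for a in activations
    ]


rng = random.Random(0)  # fixed seed so the sketch is repeatable
dropped = dropout([1.0] * 1000, drop_prob=0.5, rng=rng)
print(sorted(set(dropped)))  # prints [0.0, 2.0]
print(dropout([1.0, 2.0], drop_prob=0.5, rng=rng, training=False))  # prints [1.0, 2.0]
```

Because a different random subset of neurons is silenced on every pass, no single neuron can be relied on exclusively, which is the redundancy effect mentioned above.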

Additionally, early stopping can be employed; this technique involves monitoring validation loss during training and halting when the loss on held-out validation data stops improving. Hyperparameter tuning is another essential component of training; parameters such as learning rate, batch size, and number of epochs can significantly influence model performance and require careful experimentation.
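The early stopping rule can be sketched as a small function over a list of per-epoch validation losses. The `patience` parameter (how many non-improving epochs to tolerate) is a common convention assumed here, and the loss values are made up for illustration.

```python
def early_stopping_epoch(validation_losses, patience=2):
    """Return the index of the epoch at which training would stop."""
    best_loss = float("inf")
    epochs_without_improvement = 0
    for epoch, loss in enumerate(validation_losses):
        if loss < best_loss:
            best_loss = loss
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return epoch  # validation loss has stalled; stop here
    return len(validation_losses) - 1  # never triggered; ran to the end


# Made-up validation losses: improvement stalls after the third epoch.
losses = [0.9, 0.7, 0.6, 0.65, 0.7, 0.5]
print(early_stopping_epoch(losses, patience=2))  # prints 4
print(early_stopping_epoch(losses, patience=3))  # prints 5
```

The two calls show why patience is itself a hyperparameter: with a patience of 2, training stops before the later improvement to 0.5 is ever seen.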

Applications of Neural Networks in Various Industries

Neural networks have found applications across a wide range of industries due to their versatility and effectiveness in handling complex tasks. In healthcare, for instance, deep learning models are used for medical image analysis, enabling radiologists to detect anomalies such as tumors in X-rays or MRIs with high accuracy. These models can analyze vast amounts of imaging data quickly, assisting healthcare professionals in making timely diagnoses and treatment decisions.

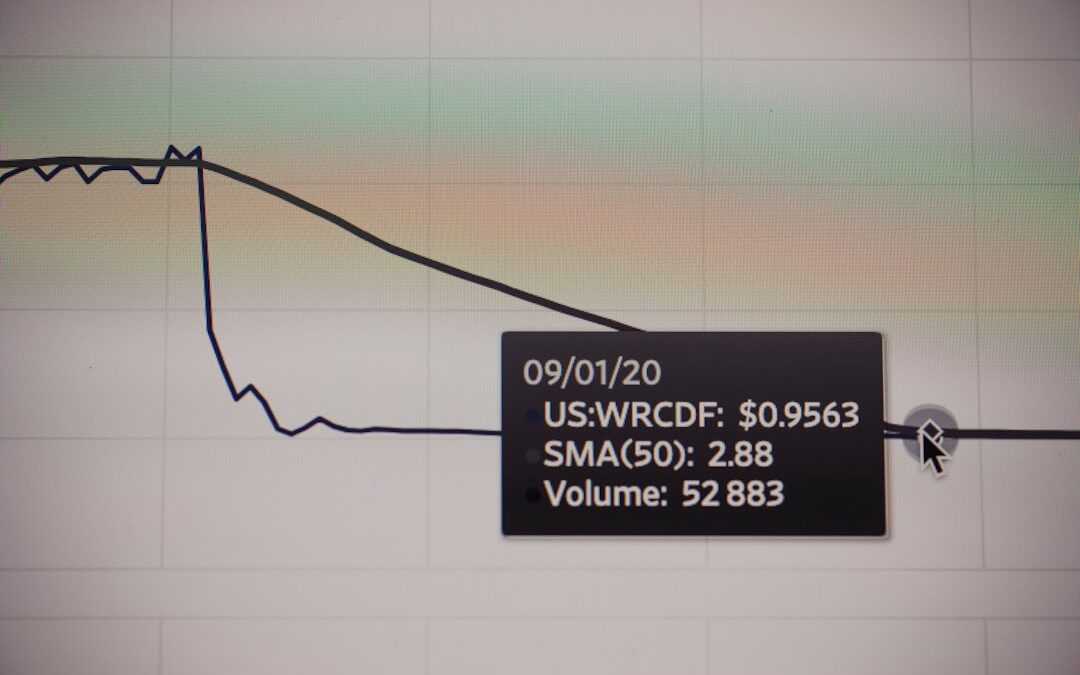

In finance, neural networks are employed for algorithmic trading and fraud detection. By analyzing historical market data and identifying patterns, these models can predict stock price movements or detect unusual transaction behaviors indicative of fraud. Similarly, in natural language processing (NLP), neural networks power applications such as chatbots and language translation services.

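Transformer models have reshaped NLP: their self-attention mechanism lets every token in a sequence weigh its relationship to every other token, which helps machines capture context and semantics in human language more effectively than earlier sequence models typically did.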

Common Misconceptions about Deep Learning

Debunking Common Misconceptions About Deep Learning

Despite its growing prominence, several misconceptions about deep learning persist among both practitioners and the general public. One common myth is that deep learning can solve any problem given enough data and computational resources. While deep learning excels at pattern recognition tasks, it is not a panacea for all challenges in artificial intelligence (AI).

Limitations of Deep Learning

Certain problems call for different approaches or simpler models that are more interpretable and easier to implement. For instance, traditional machine learning algorithms can be more effective for smaller datasets or simpler tasks.

Comparing Deep Learning to Traditional Machine Learning

Another misconception is that deep learning models are inherently superior to traditional machine learning algorithms. While deep learning can outperform classical methods in specific domains—especially those involving large datasets—traditional algorithms like decision trees or support vector machines can still be highly effective.

Challenges in Interpretability and Transparency

Furthermore, deep learning models often require extensive tuning and may lack transparency in their decision-making processes. It can be difficult to understand how such a model arrives at its conclusions, a problem that is less pronounced in simpler models and a significant drawback in applications where decisions must be explained.

Challenges and Limitations of Neural Networks

Despite their impressive capabilities, neural networks face several challenges and limitations that researchers continue to address. One significant issue is the need for large amounts of labeled data for effective training. In many real-world scenarios, obtaining labeled datasets can be time-consuming and expensive.

This limitation has led to interest in semi-supervised learning techniques that leverage both labeled and unlabeled data or transfer learning approaches that adapt pre-trained models to new tasks with limited data. Another challenge is the computational cost associated with training deep neural networks. Training large models can require substantial computational resources and time, making it less accessible for smaller organizations or individual researchers without access to high-performance computing infrastructure.

Additionally, neural networks are often criticized for their lack of interpretability; understanding how a model arrives at a particular decision can be difficult due to their complex architectures and numerous parameters.

The Future of Deep Learning and Neural Networks

The future of deep learning and neural networks appears promising as advancements continue to emerge across various fronts. Researchers are exploring novel architectures that enhance efficiency and performance while reducing computational costs. For instance, techniques like neural architecture search automate the design of neural networks tailored for specific tasks, potentially leading to more effective models with less human intervention.

Moreover, there is growing interest in developing more interpretable AI systems that provide insights into their decision-making processes. Research in explainable AI (XAI) aims to bridge the gap between model complexity and human understanding by offering explanations for predictions made by neural networks. As ethical considerations surrounding AI become increasingly important, ensuring transparency and accountability in AI systems will be crucial.

Additionally, interdisciplinary collaborations are likely to drive innovation in deep learning applications across various fields such as robotics, autonomous vehicles, and personalized medicine. As researchers continue to push the boundaries of what neural networks can achieve, we can expect transformative impacts on society through enhanced automation, improved decision-making processes, and novel solutions to complex problems across diverse industries.
