Data quality is central to artificial intelligence (AI). Data is the foundation on which AI systems are built, and it shapes their performance, reliability, and overall effectiveness. High-quality data is accurate, complete, consistent, and relevant, and each of these properties is essential for training robust AI models.

As organizations increasingly rely on AI to drive decision-making processes, the need for meticulous attention to data quality has become paramount. The implications of data quality extend beyond mere technical performance; they touch upon ethical considerations, regulatory compliance, and the trustworthiness of AI systems. The importance of data quality is underscored by the fact that AI applications are only as good as the data they are trained on.

For instance, a machine learning model designed to predict customer behavior will yield unreliable results if it is trained on outdated or biased data. This reality has prompted organizations to invest in data governance frameworks that prioritize data quality at every stage of the AI lifecycle. Ensuring high-quality data is therefore not merely a technical challenge but a strategic imperative that can determine the success or failure of AI initiatives.

Key Takeaways

- Data quality is crucial for the success of AI applications as it directly impacts their performance and reliability.

- Poor data quality can lead to biased, inaccurate, or unreliable AI models, affecting decision-making and outcomes.

- Ensuring high data quality in AI training involves data cleaning, preprocessing, and validation to improve model accuracy and generalization.

- Consequences of poor data quality in AI include biased decision-making, reduced trust in AI systems, and potential legal and ethical implications.

- Best practices for ensuring data quality in AI applications include data governance, quality assurance processes, and continuous monitoring and improvement efforts.

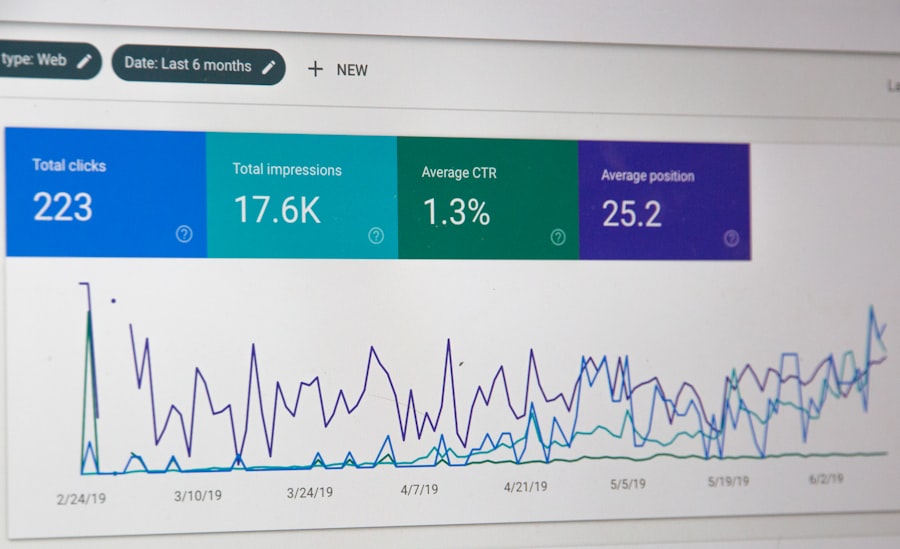

The Impact of Data Quality on AI Performance

The performance of AI systems is intricately linked to the quality of the data used in their development. High-quality data enhances the accuracy and reliability of AI models, enabling them to make informed predictions and decisions. For example, in healthcare applications, AI algorithms trained on comprehensive and accurate patient data can significantly improve diagnostic accuracy and treatment recommendations.

Conversely, if the training data is flawed or incomplete, the resulting model may produce erroneous outputs, leading to potentially harmful consequences. Moreover, the impact of data quality extends beyond initial training; it also affects the model’s ability to generalize to new, unseen data. A model trained on high-quality, diverse datasets is more likely to perform well in real-world scenarios.

In contrast, a model that has been trained on biased or unrepresentative data may struggle to adapt when faced with new inputs. This phenomenon is particularly evident in natural language processing (NLP) applications, where models trained on a narrow range of linguistic styles or dialects may fail to understand or generate text in different contexts. Thus, ensuring high data quality is crucial not only for achieving optimal performance but also for fostering adaptability and resilience in AI systems.

Understanding the Role of Data Quality in AI Training

Data quality plays a pivotal role in the training phase of AI development. During this phase, algorithms learn patterns and relationships from the input data, which directly influences their predictive capabilities. The training process involves feeding large volumes of data into machine learning models, where they identify correlations and make adjustments based on feedback.

If the input data is riddled with inaccuracies or inconsistencies, the model’s learning process can be severely compromised. For instance, consider a facial recognition system that relies on a dataset containing images of individuals from various demographics. If this dataset lacks representation from certain ethnic groups or contains mislabeled images, the model may develop biases that lead to higher error rates for underrepresented populations.

This not only undermines the effectiveness of the technology but also raises significant ethical concerns regarding fairness and discrimination. Therefore, it is essential for organizations to implement rigorous data validation processes during training to ensure that the datasets used are both comprehensive and representative.
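One way to make the representation check above concrete is to measure each group's share of the dataset and the model's error rate per group. The following is a minimal sketch, not a complete fairness audit: the function names, the group labels, and the `min_share` threshold are all hypothetical and would need to be chosen for the specific application.

```python
from collections import Counter, defaultdict


def group_shares(groups):
    """Return each group's share of the dataset as a fraction of all rows."""
    counts = Counter(groups)
    total = sum(counts.values())
    return {g: n / total for g, n in counts.items()}


def underrepresented(groups, min_share=0.2):
    """List groups whose share is below min_share (an assumed threshold)."""
    return sorted(g for g, s in group_shares(groups).items() if s < min_share)


def error_rate_by_group(groups, y_true, y_pred):
    """Return the fraction of wrong predictions within each group."""
    totals = defaultdict(int)
    errors = defaultdict(int)
    for g, truth, pred in zip(groups, y_true, y_pred):
        totals[g] += 1
        if truth != pred:
            errors[g] += 1
    return {g: errors[g] / totals[g] for g in totals}


if __name__ == "__main__":
    # Toy labels: group "b" makes up only one tenth of the rows.
    groups = ["a"] * 9 + ["b"]
    print(group_shares(groups))
    print(underrepresented(groups))
```

A large gap between per-group error rates, or a group that falls below the chosen share, is a signal to collect more data or review labels before training further.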

The Consequences of Poor Data Quality in AI

The ramifications of poor data quality in AI applications can be profound and far-reaching. One immediate consequence is the degradation of model performance, which can manifest as inaccurate predictions or decisions. In sectors such as finance, where AI is employed for credit scoring or fraud detection, poor data quality can lead to significant financial losses and reputational damage.

For example, an AI system that inaccurately assesses an individual’s creditworthiness due to flawed historical data may deny loans to deserving applicants while approving loans for high-risk individuals. Beyond performance issues, poor data quality can also result in ethical dilemmas and legal repercussions. As AI systems become more integrated into critical decision-making processes, such as hiring practices or law enforcement, there is increasing scrutiny of their fairness and accountability.

If an AI model is trained on biased or incomplete data, it may perpetuate existing inequalities or introduce new forms of discrimination. This has led to calls for greater transparency in AI systems and stricter regulations governing their use. Organizations must recognize that neglecting data quality not only jeopardizes their operational success but also poses significant ethical challenges that could undermine public trust in AI technologies.

Best Practices for Ensuring Data Quality in AI Applications

To mitigate the risks associated with poor data quality, organizations must adopt best practices that prioritize data integrity throughout the AI lifecycle. One fundamental practice is implementing robust data governance frameworks that establish clear guidelines for data collection, storage, and usage. This includes defining roles and responsibilities for data stewardship and ensuring that all stakeholders understand the importance of maintaining high-quality datasets.

Another critical practice involves conducting regular audits and assessments of existing datasets to identify and rectify any inaccuracies or inconsistencies. Organizations should invest in automated tools that facilitate data cleaning and validation processes, allowing them to maintain up-to-date and reliable datasets. Additionally, fostering a culture of continuous improvement within teams can encourage proactive identification of potential data quality issues before they escalate into larger problems.
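A recurring audit of this kind can start very small. The sketch below counts missing values, duplicate rows, and stale records in a list of dictionaries. It is illustrative only: the `audit_records` function, the `updated` field name, and the one-year staleness limit are assumptions, not part of any particular tool.

```python
from datetime import date


def audit_records(records, required_fields, as_of, max_age_days=365):
    """Summarize basic quality problems in a list of dict records.

    Counts missing required values per field, rows that repeat an earlier
    row on the required fields, and rows whose (assumed) "updated" date is
    older than max_age_days relative to as_of.
    """
    report = {
        "missing": {field: 0 for field in required_fields},
        "duplicates": 0,
        "stale": 0,
    }
    seen = set()
    for rec in records:
        for field in required_fields:
            if rec.get(field) in (None, ""):
                report["missing"][field] += 1
        key = tuple(rec.get(field) for field in required_fields)
        if key in seen:
            report["duplicates"] += 1
        seen.add(key)
        updated = rec.get("updated")
        if updated is not None and (as_of - updated).days > max_age_days:
            report["stale"] += 1
    return report


if __name__ == "__main__":
    sample = [
        {"id": 1, "email": "a@example.com", "updated": date(2024, 1, 10)},
        {"id": 2, "email": "", "updated": date(2021, 5, 1)},
        {"id": 1, "email": "a@example.com", "updated": date(2024, 1, 10)},
    ]
    print(audit_records(sample, ["id", "email"], as_of=date(2024, 6, 1)))
```

Running a report like this on a schedule and tracking the counts over time gives teams an early signal when a dataset starts to degrade.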

Collaboration with domain experts is also essential for ensuring data quality. By involving subject matter experts in the data curation process, organizations can enhance their understanding of what constitutes high-quality data within specific contexts. This collaborative approach not only improves the relevance and accuracy of datasets but also helps build trust among stakeholders who rely on AI systems for critical decision-making.

The Relationship Between Data Quality and AI Ethics

The intersection of data quality and AI ethics is an increasingly important area of focus as organizations grapple with the societal implications of their technologies. High-quality data is essential for developing ethical AI systems that promote fairness, accountability, and transparency. When datasets are biased or incomplete, they can lead to discriminatory outcomes that disproportionately affect marginalized communities.

This raises ethical questions about the responsibility of organizations to ensure that their AI systems do not perpetuate existing inequalities. Moreover, ethical considerations extend beyond the immediate impact of AI decisions; they also encompass issues related to privacy and consent. Organizations must be vigilant about how they collect and use personal data, ensuring that individuals’ rights are respected throughout the process.

This includes obtaining informed consent from individuals whose data is being used and implementing measures to protect sensitive information from unauthorized access or misuse. As public awareness of these issues grows, there is increasing pressure on organizations to adopt ethical frameworks that prioritize data quality as a fundamental principle. By committing to ethical practices in data management, organizations can not only enhance the performance of their AI systems but also build trust with users and stakeholders who are increasingly concerned about the implications of AI technologies.

The Future of Data Quality in AI

Looking ahead, the future of data quality in AI applications will be shaped by several emerging trends and technological advancements. One notable trend is the increasing reliance on automated tools for data management and quality assurance. As organizations generate vast amounts of data at an unprecedented pace, manual processes for ensuring data quality may become untenable.

Automation can streamline data cleaning, validation, and monitoring processes, allowing organizations to maintain high-quality datasets more efficiently. Additionally, advancements in machine learning techniques themselves may contribute to improved data quality management. For instance, anomaly detection algorithms can identify outliers or inconsistencies within datasets, enabling organizations to address potential issues proactively.
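As a simple illustration of anomaly detection on a single numeric column, the sketch below flags outliers with the modified z-score, which is based on the median and the median absolute deviation (MAD) and so is less distorted by the outliers themselves than a mean-based score. The function name is hypothetical, and the cutoff of 3.5 is a commonly cited default that should be tuned to the data rather than taken as given.

```python
from statistics import median


def mad_outliers(values, threshold=3.5):
    """Return indices of values whose modified z-score exceeds threshold.

    The modified z-score is 0.6745 * |x - median| / MAD. If the MAD is
    zero (at least half the values are identical), no score can be
    computed and an empty list is returned.
    """
    med = median(values)
    mad = median(abs(v - med) for v in values)
    if mad == 0:
        return []
    return [
        i
        for i, v in enumerate(values)
        if 0.6745 * abs(v - med) / mad > threshold
    ]


if __name__ == "__main__":
    readings = [10, 11, 9, 10, 12, 10, 11, 95]
    print(mad_outliers(readings))
```

Flagged rows are candidates for review rather than automatic deletion, since an unusual value may be a genuine observation instead of an error.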

Furthermore, as federated learning gains traction—where models are trained across decentralized datasets without sharing raw data—ensuring data quality will require new strategies for collaboration and validation among multiple stakeholders. The growing emphasis on explainability in AI will also influence how organizations approach data quality. As stakeholders demand greater transparency regarding how AI models make decisions, there will be a heightened focus on understanding the role that specific datasets play in shaping model behavior.

This necessitates a commitment to maintaining high-quality datasets that are not only accurate but also representative of diverse perspectives.

The Imperative of Data Quality in AI Applications

In conclusion, the imperative of ensuring high-quality data in AI applications cannot be overstated. As organizations increasingly leverage AI technologies across various sectors—from healthcare to finance—the consequences of neglecting data quality become more pronounced. High-quality datasets are essential for optimizing model performance, fostering ethical practices, and building trust with users and stakeholders alike.

As we navigate this complex landscape, it is crucial for organizations to adopt best practices that prioritize data integrity at every stage of the AI lifecycle. By investing in robust governance frameworks, leveraging automation tools, and collaborating with domain experts, organizations can enhance their ability to deliver reliable and ethical AI solutions. Ultimately, a commitment to high-quality data will not only drive operational success but also contribute to a more equitable and trustworthy future for artificial intelligence.
